
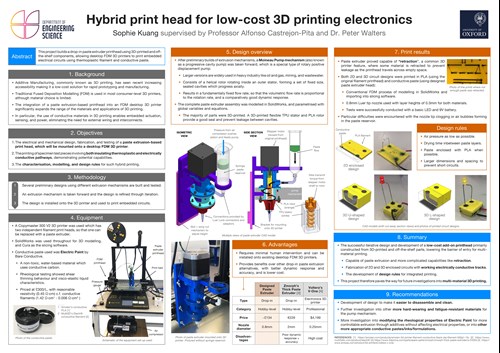

Supporting document for Sophie Kuang. This project centres on the design, development, and testing of a paste extruder printhead that can be mounted onto an existing desktop FDM 3D printer, focusing on its application in printing embedded circuits using conductive paste and thermoplastic filament.

The main objectives of this project were: 1) the electrical and mechanical design, fabrication, and testing of a paste extrusion-based printhead to be mounted onto a desktop FDM 3D printer; 2) the printing of specimen test pieces combining insulating thermoplastic and electrically conductive pathways, to demonstrate potential capabilities; and 3) the characterisation and modelling of such hybrid printing, and the creation of design rules for it. These were achieved through, first, preliminary testing of several extrusion mechanisms; then, iterative development of a selected extrusion mechanism; and finally, installation of this extruder onto a 3D printer and printing of embedded circuits.

The final paste extruder design uses a Moineau pump, otherwise known as a progressive cavity pump. It consists of a helical rotor rotating inside an outer stator; the space between them forms a set of fixed-size, sealed cavities that progress axially as the rotor turns. This gives a volume flow rate proportional to the rate of rotation and a better dynamic response than other extrusion mechanisms. It also later proved capable of retraction, a common feature of 3D printers in which some material is drawn back to prevent leakage as the printhead travels across empty space.
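The proportionality between flow and rotation can be made concrete. The sketch below assumes an idealised single-lobe (1:2) progressive cavity pump with no slip or leakage, for which the cavity cross-section is 4eD (eccentricity e, rotor diameter D) and the cavities advance one stator pitch P_s per revolution. The geometry, bead size, and motor settings are hypothetical values, not the project's dimensions.

```python
import math

def pcp_displacement_per_rev(e_mm: float, d_mm: float, stator_pitch_mm: float) -> float:
    """Ideal volume (mm^3) delivered per rotor revolution by a single-lobe (1:2) PCP.

    Cavity cross-section is 4*e*D and the cavities advance one stator pitch per
    revolution, so volume per rev = 4 * e * D * P_s (no slip, no leakage assumed).
    """
    return 4.0 * e_mm * d_mm * stator_pitch_mm

def flow_rate(displacement_mm3: float, rpm: float) -> float:
    """Volume flow rate in mm^3/s; a negative rpm (reverse rotation) models retraction."""
    return displacement_mm3 * rpm / 60.0

def steps_per_mm_of_bead(displacement_mm3, bead_area_mm2, full_steps_per_rev=200, microsteps=16):
    """Motor microsteps per mm of deposited bead, assuming volume conservation."""
    revs_per_mm = bead_area_mm2 / displacement_mm3
    return revs_per_mm * full_steps_per_rev * microsteps

# Hypothetical geometry: e = 1 mm, D = 6 mm, P_s = 24 mm; 0.8 mm round bead
v_rev = pcp_displacement_per_rev(1.0, 6.0, 24.0)
bead_area = math.pi * 0.4 ** 2
print(v_rev, flow_rate(v_rev, 6.0), steps_per_mm_of_bead(v_rev, bead_area))
```

Because flow depends only on rotor speed, a printer's extruder step setting can in principle be derived from geometry; in practice paste compressibility and TPU stator leakage would call for calibration.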

SolidWorks was used to model and parametrise the assembly using global variables and equations. Most of the parts were either 3D-printed or off-the-shelf components. In particular, the rotor was 3D-printed in PLA and the stator in flexible TPU, to provide a good seal and prevent leakage between the cavities. The paste extruder was then mounted onto a 3D printer and used to successfully print both 2D and 3D circuit designs using conductive paste and PLA.
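As an illustration of driving a pump model from a few global variables, the sketch below derives the stator dimensions of an idealised single-lobe progressive cavity pump from the rotor's dimensions: the stator pitch is twice the rotor pitch, and the stator bore is an obround (stadium) slot of width D and length D + 4e. The `interference` parameter, a radial undersize of the flexible TPU stator to help it seal against the rotor, is a hypothetical addition and not taken from the project's model.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class PcpGlobals:
    """Driving dimensions in mm, analogous to CAD global variables."""
    rotor_diameter: float       # D: diameter of the rotor's circular cross-section
    eccentricity: float         # e: offset of that circle from the rotor axis
    rotor_pitch: float          # P_r: pitch of the rotor helix
    interference: float = 0.0   # hypothetical radial undersize of the TPU stator for sealing

    @property
    def stator_pitch(self) -> float:
        # Single-lobe PCP: the two-start stator helix has twice the rotor pitch
        return 2.0 * self.rotor_pitch

    @property
    def stator_slot_width(self) -> float:
        return self.rotor_diameter - 2.0 * self.interference

    @property
    def stator_slot_length(self) -> float:
        # Obround section: two semicircles of diameter D whose centres are 4e apart
        return self.rotor_diameter + 4.0 * self.eccentricity - 2.0 * self.interference

g = PcpGlobals(rotor_diameter=6.0, eccentricity=1.0, rotor_pitch=12.0, interference=0.1)
print(g.stator_pitch, g.stator_slot_width, g.stator_slot_length)
```

Keeping the derived dimensions as expressions of a few inputs means a change to D or e propagates to both rotor and stator, which is the main benefit of a parametric model.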

Tests were conducted with a basic LED and a 9 V battery, and a set of design rules was created for integrated printing. This paste extruder thus offers benefits over other drop-in alternatives: better dynamic response and accuracy, and more advanced capabilities such as retraction. Minimal human intervention is required, and it can easily be installed onto existing desktop FDM 3D printers because its stepper motor is taken from the original printhead. It is also lower cost, with a total cost of just over £130.
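The design rules themselves are not listed here, but one check of the kind they involve can be sketched: treating a printed paste trace as a resistor, R = ρL/(wt), and estimating the current it lets through an LED on a 9 V supply. The resistivity, trace dimensions, and LED forward voltage below are hypothetical placeholders, not measured properties of the paste used.

```python
def trace_resistance(resistivity_ohm_m: float, length_m: float, width_m: float, thickness_m: float) -> float:
    """R = rho * L / (w * t) for a printed trace with a rectangular cross-section."""
    return resistivity_ohm_m * length_m / (width_m * thickness_m)

def led_current(v_supply: float, v_forward: float, r_total_ohm: float) -> float:
    """Steady-state LED current in A; zero if the supply does not exceed the forward voltage."""
    headroom = v_supply - v_forward
    return headroom / r_total_ohm if headroom > 0 else 0.0

def max_trace_length(resistivity_ohm_m, width_m, thickness_m, r_budget_ohm):
    """Longest trace (m) that stays within a resistance budget: a simple design rule."""
    return r_budget_ohm * width_m * thickness_m / resistivity_ohm_m

# Hypothetical paste and trace: rho = 1e-3 ohm*m, 100 mm long, 1 mm wide, 0.5 mm thick
r = trace_resistance(1e-3, 0.100, 1e-3, 0.5e-3)
i = led_current(9.0, 2.0, r_total_ohm=2 * r)   # outgoing and return traces in series
print(r, i)
```

Rules such as a minimum trace width or maximum length for a given supply voltage follow directly from rearranging these relations.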

This project therefore paves the way for future investigations into multi-material 3D printing. It shows that 3D-printed parts and off-the-shelf components can be used to build a lower-cost and more capable paste extruder. This lowers the barrier to entry for using conductive materials in 3D printing, enabling embedded actuation, sensing, and power without external wiring or interconnects, with potential applications in wearable technology, sensors, and robotics.

Further investigation is recommended into modifying the rheological properties of the conductive paste without affecting its electrical properties, or into alternative, more suitable paste formulations.

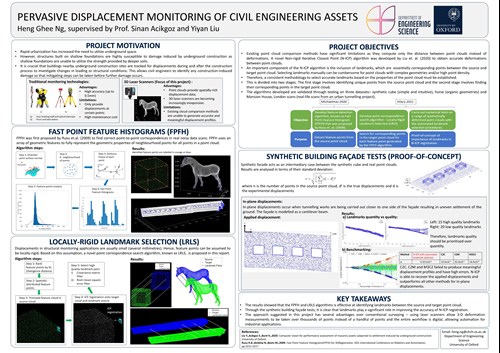

Project Motivation: Rapid urbanization has increased the need to utilize underground space. However, underground construction alters the soil and support conditions of nearby buildings, resulting in changes to their structural or loading conditions. It is therefore vital that buildings near underground construction sites are monitored, so that construction-induced movements can be detected early and mitigating steps taken before damage progresses.

Existing monitoring technologies are invasive, do not provide sufficient displacement data, or both. 3D laser scanning, a non-invasive technology that produces spatially rich point clouds, is gaining popularity as inexpensive laser scanners become more widely available. However, existing cloud comparison methods are unable to produce meaningful and accurate displacement profiles. Optimal-step non-rigid ICP (N-ICP) registration shows great potential, as Liu et al. (2020) demonstrated that it can recover displacements accurately.

Project Objectives: The performance of N-ICP can be enhanced by including landmark pairs, which are corresponding points between the source (undeformed) and target (deformed) point clouds. Landmarks help N-ICP with initial alignment and guide it throughout the registration. This project aims to automate landmark selection, as manually selecting landmarks is extremely difficult for buildings with complex architecture and for scans with high point densities.

The proposed method consists of two stages. The first stage, feature detection, identifies a set of distinctive points in the source point cloud. The second stage, point correspondence search, matches each feature point identified in the first stage to a corresponding point in the target point cloud. For the first stage, the Fast Point Feature Histograms (FPFH) algorithm proposed by Rusu et al. (2009) is implemented with several modifications.
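A common baseline for the correspondence stage is to match feature points whose descriptors are mutual nearest neighbours. The sketch below assumes the FPFH descriptors (histograms, one row per feature point) have already been computed, for example with a point-cloud library that provides an FPFH implementation; it is a generic baseline, not the project's modified FPFH or its correspondence algorithm.

```python
import numpy as np

def mutual_nn_matches(desc_src: np.ndarray, desc_tgt: np.ndarray) -> np.ndarray:
    """Return (i, j) index pairs where source feature i and target feature j are each
    other's nearest neighbour in descriptor space (Euclidean distance between histograms)."""
    # Pairwise squared distances, shape (n_src, n_tgt)
    d2 = ((desc_src[:, None, :] - desc_tgt[None, :, :]) ** 2).sum(axis=-1)
    fwd = d2.argmin(axis=1)   # best target for each source feature
    bwd = d2.argmin(axis=0)   # best source for each target feature
    pairs = [(i, j) for i, j in enumerate(fwd) if bwd[j] == i]
    return np.array(pairs, dtype=int).reshape(-1, 2)

# Toy descriptors: the target holds the same histograms, shuffled and slightly perturbed
desc_src = np.eye(3)
desc_tgt = desc_src[[2, 0, 1]] + 0.01
pairs = mutual_nn_matches(desc_src, desc_tgt)
print(pairs)
```

Descriptor matching alone ignores geometry, so repetitive façade elements (identical windows, for instance) can still produce confident but wrong matches; this is the gap a geometric consistency check is meant to close.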

For the second stage, a novel point correspondence search algorithm, Locally-Rigid Landmark Selection (LRLS), is proposed. This algorithm relies on the assumption that feature points are locally rigid for the range of displacements common in buildings.

Impact and Takeaway: Validation tests were carried out on three datasets: a synthetic cube (simple and intuitive), a horse point cloud (organic geometries), and scans of Mansion House, London (real-life scans from an urban tunnelling project).
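The local-rigidity assumption behind LRLS can be illustrated with a generic distance-preservation test: if a neighbourhood of the building moves almost rigidly, the distances from a correct landmark to its nearby landmarks barely change between source and target. This is a minimal sketch of that idea, not the LRLS algorithm itself; the neighbourhood size `k` and tolerance `rel_tol` are hypothetical parameters.

```python
import numpy as np

def rigidity_filter(src_pts, tgt_pts, k=4, rel_tol=0.05):
    """Keep candidate landmark pairs whose distances to their k nearest fellow
    landmarks (measured in the source) are preserved in the target within rel_tol.

    src_pts, tgt_pts: (n, 3) arrays; row i of each forms candidate pair i.
    """
    src = np.asarray(src_pts, dtype=float)
    tgt = np.asarray(tgt_pts, dtype=float)
    d_src = np.linalg.norm(src[:, None] - src[None], axis=-1)
    d_tgt = np.linalg.norm(tgt[:, None] - tgt[None], axis=-1)
    keep = np.zeros(len(src), dtype=bool)
    for i in range(len(src)):
        nbrs = np.argsort(d_src[i])[1:k + 1]   # skip i itself (distance 0)
        rel_err = np.abs(d_tgt[i, nbrs] - d_src[i, nbrs]) / d_src[i, nbrs]
        # Median, so one bad neighbouring pair cannot reject a good landmark
        keep[i] = np.median(rel_err) <= rel_tol
    return keep

# Cube corners shifted slightly, with one deliberately wrong correspondence
src = np.array([[x, y, z] for x in (0, 1) for y in (0, 1) for z in (0, 1)], dtype=float)
tgt = src + np.array([0.01, 0.0, 0.0])
tgt[3] = [5.0, 5.0, 5.0]
print(rigidity_filter(src, tgt))
```

Using the median rather than the maximum error is a design choice that tolerates a minority of bad neighbours; a stricter filter would trade landmark quantity for accuracy.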

The results show that FPFH combined with LRLS successfully identified accurate landmark pairs. Tests were also carried out on a synthetic building façade, which acts as an intermediate case between the synthetic cube and real point clouds. These tests established that including landmarks in N-ICP increases its accuracy for small displacements. They also showed the importance of well-distributed landmarks, and that landmark accuracy should be prioritised over landmark quantity. Lastly, the tests show that N-ICP outperforms existing cloud comparison methods (C2C, C2M and M3C2).
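One reason a method such as C2C cannot give true displacement profiles is that it only measures the distance from each point to the nearest point of the other cloud. The minimal illustration below (hypothetical geometry, brute-force nearest neighbours) slides a flat wall patch along its own plane: every point genuinely moves 10 cm, yet most C2C distances are essentially zero.

```python
import numpy as np

def c2c_distances(reference: np.ndarray, compared: np.ndarray) -> np.ndarray:
    """Cloud-to-cloud (C2C): distance from each compared point to its nearest reference point."""
    d = np.linalg.norm(compared[:, None, :] - reference[None, :, :], axis=-1)
    return d.min(axis=1)

grid = np.arange(20) * 0.05                   # roughly 1 m patch sampled every 5 cm
xs, zs = np.meshgrid(grid, grid)
wall = np.column_stack([xs.ravel(), np.zeros(xs.size), zs.ravel()])   # wall in the x-z plane
moved = wall + np.array([0.10, 0.0, 0.0])     # slide 10 cm along the wall's own plane

c2c = c2c_distances(wall, moved)
true_disp = np.linalg.norm(moved - wall, axis=1)
print(np.median(c2c), true_disp.mean())
```

Registration methods such as N-ICP instead estimate where each source point goes, so in-plane motion like this remains visible.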

Compared to conventional surveying methods, the approach suggested in this project has several advantages: a) using laser scanners allows 3D deformation measurements to be computed over thousands of points instead of just a handful; b) the entire workflow is digital, allowing automation for industrial applications.

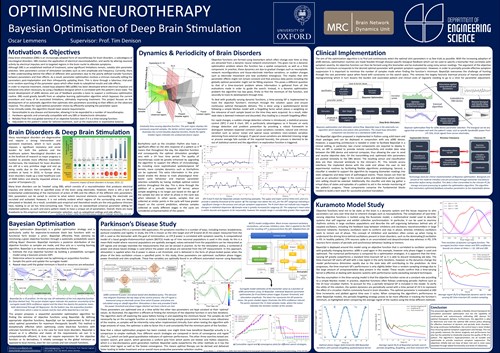

Deep brain stimulation (DBS) is emerging as an effective therapy for a growing number of neurological disorders, such as essential tremor, Parkinson's disease and epilepsy. Although DBS is an established method of treatment, some significant limitations remain, such as stimulation parameter selection. This is primarily due to the limited understanding of how electrical impulses interact with surrounding neurons.

To date, DBS parameter optimisation has been performed manually by a clinician and involves an extensive trial-and-error search of the parameter space, which is hard to carry out without triggering side effects. This approach is limited because it is too time-consuming to test each of the many parameter combinations in the large search space. The attainable therapeutic benefit therefore depends heavily on the clinician's intuition and expertise.

When treating novel disorders, the current heuristic is simply to copy settings across from other diseases instead of laboriously searching the space. Furthermore, parameters are updated infrequently and so cannot account for changes in the stimulation response over time, leading to suboptimal therapy until the next in-clinic adjustment.

Despite innovation in the field, new closed-loop adaptive DBS (aDBS) devices still rely on this heuristic parameter tuning procedure. The lack of parameter optimisation frameworks and guidance is a limitation for current DBS therapies and leaves tuning as an ad hoc, empirical task.

However, the development of aDBS devices and use of feedback biosignals now provide observability of the system and a basis to support a continuous optimisation routine. In order to improve therapeutic efficacy, an adaptive algorithm that optimises stimulation parameters according to their effects on the observed response is proposed.

This project introduces an autonomous infrastructure into the field of neurotherapy that replaces laborious manual parameter tuning and obtains optimal settings for improved patient treatment. The basis of this infrastructure is a novel Bayesian optimisation algorithm that uses biosignal feedback to efficiently search a parameter space and rapidly select optimal stimulation parameters for DBS devices. The presented algorithm provides a flexible clinical framework for stimulation parameter optimisation, as it is hardware agnostic and adaptable to various neurological disorders and applications.
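The project's algorithm is not specified in detail here, so the following is only a minimal sketch of generic Gaussian-process Bayesian optimisation over a single stimulation parameter. It assumes a hypothetical scalar symptom score to be minimised (for instance a biomarker derived from feedback biosignals); the kernel lengthscale, noise level, candidate grid, and lower-confidence-bound weight `beta` are illustrative choices, not the project's hyperparameters.

```python
import numpy as np

def rbf(a, b, length=0.5, var=1.0):
    """Squared-exponential kernel between 1-D parameter arrays a and b."""
    return var * np.exp(-0.5 * (a[:, None] - b[None, :]) ** 2 / length ** 2)

def gp_posterior(x_obs, y_obs, x_query, noise=1e-4, length=0.5):
    """Gaussian-process posterior mean and standard deviation at x_query."""
    K = rbf(x_obs, x_obs, length) + noise * np.eye(len(x_obs))
    Ks = rbf(x_obs, x_query, length)
    L = np.linalg.cholesky(K)
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y_obs))
    mean = Ks.T @ alpha
    v = np.linalg.solve(L, Ks)
    var = np.clip(1.0 - (v ** 2).sum(axis=0), 1e-12, None)   # prior variance is 1.0
    return mean, np.sqrt(var)

def bayes_opt(objective, lo, hi, n_init=3, n_iter=12, beta=2.0):
    """Minimise `objective` on [lo, hi] using a GP surrogate and a lower-confidence-bound rule."""
    grid = np.linspace(lo, hi, 201)
    x = list(np.linspace(lo, hi, n_init))
    y = [objective(v) for v in x]
    for _ in range(n_iter):
        y_arr = np.array(y)
        y_norm = (y_arr - y_arr.mean()) / (y_arr.std() + 1e-9)   # standardise targets
        mu, sd = gp_posterior(np.array(x), y_norm, grid)
        x_next = grid[np.argmin(mu - beta * sd)]   # optimistic estimate of the symptom score
        x.append(x_next)
        y.append(objective(x_next))
    best = int(np.argmin(y))
    return x[best], y[best]

# Hypothetical noise-free symptom score versus stimulation amplitude (mA), minimum at 3.4
x_best, y_best = bayes_opt(lambda a: (a - 3.4) ** 2 + 0.5, 0.0, 5.0)
print(x_best, y_best)
```

The lower confidence bound trades off exploiting settings that already look good against exploring uncertain regions, which is what lets the search find a good setting in a handful of trials rather than an exhaustive sweep.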

The algorithm's hyperparameters are also all tunable to provide maximum symptom suppression. Furthermore, the algorithm can determine and track the optimal DBS settings, allowing effective treatment of dynamic brain disorders. An advanced smooth forgetting procedure, facilitated by a spatiotemporal kernel, enables superior results when dealing with dynamic systems such as those often seen in neural networks. The algorithm's performance is further enhanced by an innovative periodic-forgetting kernel that accounts for biorhythmic behaviour such as circadian rhythms.
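A kernel with these properties can be sketched as a product of three terms: a spatial similarity between stimulation settings, a time-decay "forgetting" factor (1 − ε)^(|t − t′|/2) that down-weights old observations, and a periodic term that restores similarity between observations taken a whole period apart (for example 24 h). This is a generic construction under those assumptions, not the project's kernel; all hyperparameter values are hypothetical.

```python
import numpy as np

def spatiotemporal_kernel(x1, t1, x2, t2, length=0.5, eps=0.01, period=24.0, per_length=1.0):
    """k((x,t),(x',t')) = k_space * k_forget * k_periodic (hypothetical hyperparameters).

    k_space    : squared-exponential similarity between stimulation settings
    k_forget   : (1 - eps) ** (|t - t'| / 2), so older observations count for less
    k_periodic : exp(-2 sin^2(pi |t - t'| / period) / per_length^2), e.g. a daily rhythm
    """
    x1, t1, x2, t2 = (np.asarray(a, dtype=float) for a in (x1, t1, x2, t2))
    dx = x1[:, None] - x2[None, :]
    dt = np.abs(t1[:, None] - t2[None, :])
    k_space = np.exp(-0.5 * dx ** 2 / length ** 2)
    k_forget = (1.0 - eps) ** (dt / 2.0)
    k_periodic = np.exp(-2.0 * np.sin(np.pi * dt / period) ** 2 / per_length ** 2)
    return k_space * k_forget * k_periodic

# Same setting observed 12 h and 24 h later (time in hours)
k = spatiotemporal_kernel([1.0], [0.0], [1.0, 1.0], [12.0, 24.0])
print(k)
```

With these values, an observation from 24 h earlier is weighted more heavily than one from 12 h earlier, because it was taken at the same phase of the daily cycle; the forgetting factor still makes it count for less than a fresh measurement.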

Furthermore, the statistical process control scheme implemented can monitor and address sudden changes that may arise. Together, these elements are designed to ensure that the surrogate function remains accurate and that the true global minimum is continuously selected for optimal therapy. Overall, this versatile optimisation routine is hardware agnostic and generalisable to a wide range of disorders and applications, and thus provides a powerful state-of-the-art clinical framework for stimulation parameter optimisation.
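The specific control scheme is not described here; one of the simplest statistical process control tools is a Shewhart chart, sketched below. Limits are set at the mean plus or minus three standard deviations of an in-control baseline, and any new sample outside them is flagged. The baseline values are made up, and what the optimiser should do on an alarm (for example, discarding or down-weighting older surrogate data) is an assumption left to the caller.

```python
import numpy as np

def shewhart_alarms(baseline, stream, n_sigma=3.0):
    """Indices of samples in `stream` outside mean +/- n_sigma * std of an in-control baseline."""
    baseline = np.asarray(baseline, dtype=float)
    centre, sigma = baseline.mean(), baseline.std(ddof=1)
    lo, hi = centre - n_sigma * sigma, centre + n_sigma * sigma
    stream = np.asarray(stream, dtype=float)
    return np.flatnonzero((stream < lo) | (stream > hi))

# Made-up symptom scores: a stable baseline, then a sudden jump at index 2 of the stream
baseline = [1.0, 1.1, 0.9, 1.0, 1.05, 0.95]
alarms = shewhart_alarms(baseline, [1.02, 0.98, 1.6, 1.01])
print(alarms)
```

Shewhart charts react quickly to large, abrupt shifts; slow drifts are better handled by cumulative schemes or by a forgetting kernel.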

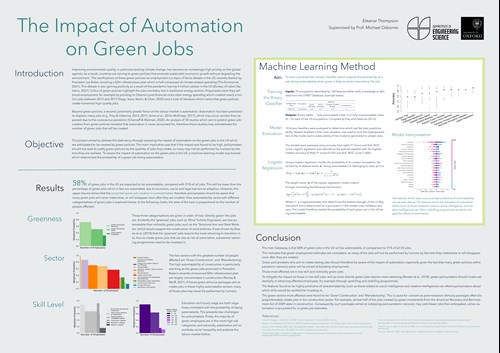

The Impact of Automation on Green Jobs

Summary

This project aimed to estimate the impact of automation on green jobs in the US by determining the probability that they will be automatable. Trends of automatability within green jobs were also explored, as were the features which were highly predictive of automatability, to provide meaningful insight for policymakers. Many existing estimates of the employment impacts from green policies anticipate the creation of green jobs.

This project was motivated by the omission of the effect of automation from such estimates, despite mounting evidence that automation will continue to displace a significant number of jobs. It is predicted that 58% of green jobs will be automatable, compared with 51% of all jobs in the US. This result was obtained by training a probabilistic classifier that outputs the probability of a job being automatable, given a feature vector describing the job.

The probabilities were multiplied by the number of employees in each occupation to yield the expected number of automatable green jobs. Ten classifiers were evaluated on the training data compiled by Frey and Osborne (2017), which comprises 70 occupations labelled as either fully automatable or fully unautomatable.
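The aggregation step is an expected value: the sum over occupations of P(automatable) times employment. A minimal sketch, with made-up occupations and figures:

```python
def expected_automatable(prob, employment):
    """Expected number and share of automatable jobs.

    prob       : occupation -> P(automatable) from the classifier
    employment : occupation -> number of employees in that occupation
    """
    total = sum(employment[occ] for occ in prob)
    expected = sum(prob[occ] * employment[occ] for occ in prob)
    return expected, expected / total

# Made-up occupations and figures, for illustration only
prob = {"occupation_a": 0.7, "occupation_b": 0.1}
employment = {"occupation_a": 1000, "occupation_b": 500}
expected, share = expected_automatable(prob, employment)
print(expected, share)
```

Because each occupation contributes in proportion to its employment, a headline share such as 58% is dominated by the largest green occupations.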

The classifiers were evaluated using nested k-fold cross-validation to avoid bias in the hyperparameter tuning and model selection process. Logistic regression was selected as the optimal classifier, with the highest median accuracy (0.964), F1 score (0.933) and area under the ROC curve (AUC-ROC, 1.000) when tested on unseen portions of the training data. As employment impacts are a major factor in determining green policies, the 58% result demonstrates that the impact of automation should be considered, so that green policies do not promise green jobs that will no longer be performed by humans by the time they are realised.
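Nested cross-validation keeps tuning and evaluation apart: an inner loop chooses hyperparameters using only the outer-training split, and the untouched outer fold scores the tuned model once. The dependency-light sketch below tunes only the L2 strength of a gradient-descent logistic regression on synthetic data (70 samples, echoing the training-set size); the project's comparison of ten classifiers would add the model choice to the inner loop. Data, folds, and hyperparameter grid are all hypothetical.

```python
import numpy as np

def fit_logreg(X, y, lam, iters=500, lr=0.1):
    """L2-regularised logistic regression fitted by batch gradient descent."""
    Xb = np.hstack([X, np.ones((len(X), 1))])   # append a bias column
    w = np.zeros(Xb.shape[1])
    for _ in range(iters):
        p = 1.0 / (1.0 + np.exp(-Xb @ w))
        grad = Xb.T @ (p - y) / len(y)
        grad[:-1] += lam * w[:-1]                # the bias is not penalised
        w -= lr * grad
    return w

def accuracy(w, X, y):
    Xb = np.hstack([X, np.ones((len(X), 1))])
    return float(np.mean(((Xb @ w) >= 0) == (y == 1)))   # logit >= 0 means p >= 0.5

def folds(n, k, rng):
    return np.array_split(rng.permutation(n), k)

def nested_cv(X, y, lambdas, k_outer=5, k_inner=4, seed=0):
    """Outer folds estimate generalisation; inner folds (outer-training data only) pick lambda."""
    rng = np.random.default_rng(seed)
    outer_scores = []
    for test_idx in folds(len(y), k_outer, rng):
        train_idx = np.setdiff1d(np.arange(len(y)), test_idx)
        X_tr, y_tr = X[train_idx], y[train_idx]
        inner = folds(len(y_tr), k_inner, rng)
        inner_means = []
        for lam in lambdas:
            scores = []
            for val_idx in inner:
                fit_idx = np.setdiff1d(np.arange(len(y_tr)), val_idx)
                w = fit_logreg(X_tr[fit_idx], y_tr[fit_idx], lam)
                scores.append(accuracy(w, X_tr[val_idx], y_tr[val_idx]))
            inner_means.append(np.mean(scores))
        best_lam = lambdas[int(np.argmax(inner_means))]
        w = fit_logreg(X_tr, y_tr, best_lam)     # refit on the whole outer-training split
        outer_scores.append(accuracy(w, X[test_idx], y[test_idx]))
    return float(np.median(outer_scores)), outer_scores

# Synthetic, well-separated two-class data (70 samples)
rng = np.random.default_rng(1)
X = np.vstack([rng.normal(-2, 0.5, (35, 2)), rng.normal(2, 0.5, (35, 2))])
y = np.array([0] * 35 + [1] * 35, dtype=float)
median_acc, per_fold = nested_cv(X, y, lambdas=[0.001, 0.01, 0.1])
print(median_acc, per_fold)
```

Reporting the median over outer folds, as the project does, reduces the influence of a single unlucky split on a small dataset.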

The automatability of green jobs is shown to be negatively correlated with the skill level of a job, as well as with how ‘directly green’ the job is. The most affected sectors are ‘Green Construction’ and ‘Manufacturing’. This should be of particular concern to policymakers, as post-recession green stimulus packages have in the past created a disproportionate number of jobs in these sectors.

Consequently, such packages aimed at catalysing post-pandemic recovery may yield fewer jobs than anticipated unless automation is accounted for in green job estimates. The features found to be highly predictive of unautomatability (such as those related to social intelligence and creative intelligence) can inform policymakers about which skills would be worth investing in.

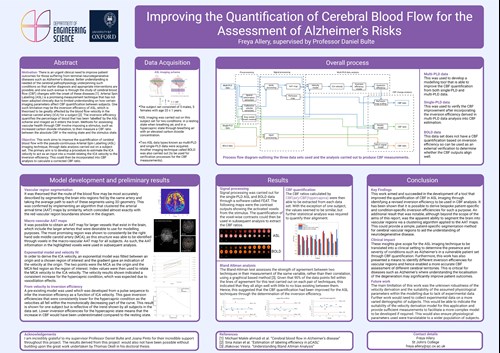

Improving the Quantification of Cerebral Blood Flow for the Assessment of Alzheimer’s Risks

Motivation

There is a critical need to improve the diagnosis, prognosis, and monitoring of terminal neurodegenerative diseases such as Alzheimer's disease (AD).

The aim of this project is to define a method that improves the quantification of cerebral blood flow (CBF) from pseudo-continuous Arterial Spin Labelling (pCASL) imaging, through analysis of a pre-existing dataset. Improving this technique would have a tangible impact on the understanding of cerebral pathophysiology in AD, where the change in CBF in response to a stimulus may be a significant biomarker to aid the diagnosis and treatment of this condition.

Accurate quantification of this parameter could offer an avenue to earlier diagnosis of such diseases, as well as a platform for developing treatments that target this specific biomarker. Pseudo-continuous ASL is subject to several uncertainties, a significant one being its inversion efficiency. This parameter quantifies the proportion of blood that has been 'labelled' by the ASL scheme and imaged as it enters the brain, and is thought to depend strongly on the subject's blood velocity in the internal carotid artery (ICA).

As such, the primary objective of this work is to develop a method to estimate the ICA velocity and the corresponding inversion efficiency, accounting for both subject and condition variability, which can then be incorporated into the analysis to give a revised CBF value.

Methods

Methods for assessing vascular health through CBF involve imposing a stimulus and measuring the ratio of CBF in the resting state to CBF in the stimulus state. The dataset for this project comprised 10 subjects (5 male, 5 female; age 20 ± 1 years). ASL imaging was carried out on these subjects under two conditions: a resting state while breathing air, and a hypercapnic stimulus state while breathing air with an elevated carbon dioxide concentration. Modelling was carried out in MATLAB and image analysis software to estimate the ICA velocity, and this estimate was fed into a further model that gives inversion efficiency as a function of ICA velocity. This inversion efficiency was then incorporated into the CBF analysis to output a corrected CBF quantification.
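Why the inversion efficiency matters is clearest from the standard single-compartment pCASL quantification equation (the form recommended in the ASL consensus guidelines): CBF is inversely proportional to the labelling efficiency α, so any error in α maps directly onto CBF, and using different α values for rest and hypercapnia changes the CBF ratio. The sketch below uses the commonly recommended defaults (λ = 0.9 ml/g, blood T1 = 1.65 s at 3 T, 1.8 s label duration and post-labelling delay); the signal values and condition-specific α values are hypothetical, not results from this project.

```python
import math

def pcasl_cbf(delta_m, m0, alpha, pld_s=1.8, tau_s=1.8, t1_blood_s=1.65, lam=0.9):
    """Single-compartment pCASL CBF in ml/100 g/min.

    delta_m    : control minus label signal     m0    : equilibrium (proton-density) signal
    alpha      : labelling/inversion efficiency pld_s : post-labelling delay (s)
    tau_s      : label duration (s)             lam   : blood-brain partition coefficient (ml/g)
    """
    num = 6000.0 * lam * delta_m * math.exp(pld_s / t1_blood_s)
    den = 2.0 * alpha * t1_blood_s * m0 * (1.0 - math.exp(-tau_s / t1_blood_s))
    return num / den

# Hypothetical signals and efficiencies, for illustration only
cbf_rest_default = pcasl_cbf(delta_m=1.0, m0=100.0, alpha=0.85)   # fixed, population-default alpha
cbf_rest = pcasl_cbf(delta_m=1.0, m0=100.0, alpha=0.80)           # subject-specific alpha at rest
cbf_hyper = pcasl_cbf(delta_m=1.4, m0=100.0, alpha=0.72)          # assumed lower alpha at higher ICA velocity
print(cbf_rest / cbf_rest_default)   # equals 0.85 / 0.80 up to rounding: CBF scales as 1 / alpha
print(cbf_rest / cbf_hyper)          # resting-to-stimulus ratio with condition-specific alpha
```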

Conclusions and clinical impact

This work succeeded in its aim of developing a tool that improves CBF quantification in pCASL imaging by identifying a revised inversion efficiency to use in CBF analysis. These insights give scope for translating the ASL imaging technique into a clinical setting, to determine the presence and severity of conditions such as Alzheimer's in a vulnerable patient group through CBF quantification. Furthermore, this work has presented a means to identify different inversion efficiencies for different vascular regions, enabling a more accurate CBF assessment of different cerebral territories. This is critical for diseases such as Alzheimer's, where understanding the localisation of the degeneration may significantly improve patient outcomes.

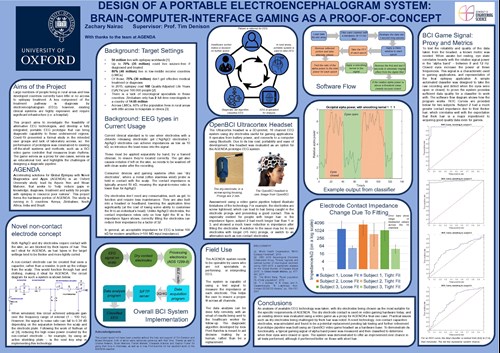

4YP Poster Competition Supplementary Statement – Zachary Nairac

The aim of AGENDA is to design and build a portable, low cost EEG system for use in remote areas and developing countries. Epilepsy is most prevalent in these countries, but access to diagnosis and treatment is extremely limited. Since common epilepsy drugs are very cheap, a key roadblock in alleviating the effects of epilepsy there is the lack of EEG capability.

This project, as part of AGENDA, will have a huge impact if it helps produce a diagnostically useful device that can be deployed in these settings. In this project, I focused on evaluating different options for EEG measurement and on implementing an educational tool, based on an existing gaming headset, that produces input signals for a game. This educational system can be used to teach about the brain and epilepsy, as well as serve as a development platform for the clinical system.

A major issue this year was how little data I could acquire, even with the BCI video game system, because of social distancing. I was fortunate to be living in a house with someone from Sudan, as those recordings underlined the magnitude of the problem the gaming headset has with thicker hair. Had I been living by myself, I might not have known until we sent a prototype out for testing. This is an issue with all dry electrodes, but it is a particular problem for this headset because a) the prongs are only 2 mm long, and b) tightening is done via a screw, which twirls hair up like spaghetti around a fork.

A future design could address both problems: dry electrodes 5 mm long are available, and tightening with a straight-push ratcheting system would avoid the twisting. However, these changes would require a redesign of the headset, which was not possible in the timeframe.

A key lesson from testing the system, even on the small number of people available, is the large variation in head shape. Besides making a standardised fit hard to ensure, this matters because adjustment is done by hand and so depends heavily on the user.

In the poster, I mentioned how the inbuilt impedance check can ensure a decent connection, but it’s equally important to follow a rigorous application protocol to maximise contact quality and repeatability. When analysing the alpha band results from the educational tool, there is a clear distinction between people with different hair lengths (as predicted by the impedance check).

However, this isn’t the only variable affecting the performance. Strong alpha activity requires the person to “clear their mind”; depending on stress, pain or other factors, this is more difficult for some people. Existing competitive EEG-based games rely on this difference.

The impact of this variation will need to be understood for the clinical system’s design. To complete the prototype pipeline, a functioning game input device was developed based on a battery-powered EEG headset and a Raspberry Pi microcomputer. The analysis program can run both remotely and locally, and was shown to classify well whether the subject’s eyes were open or closed during recording.
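Eyes-open/eyes-closed classification typically exploits the rise in alpha-band (about 8-12 Hz) power when the eyes close. The sketch below is a generic version of that idea, not the project's analysis program: it compares Hann-windowed periodogram power in the alpha band with the 4-30 Hz average and applies a hypothetical threshold. The sample rate, window length, and synthetic signals are assumptions.

```python
import numpy as np

def band_power(x, fs, f_lo, f_hi):
    """Mean Hann-windowed periodogram power of x between f_lo and f_hi (Hz)."""
    x = np.asarray(x, dtype=float)
    x = x - x.mean()
    spec = np.abs(np.fft.rfft(x * np.hanning(len(x)))) ** 2
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    band = (freqs >= f_lo) & (freqs <= f_hi)
    return spec[band].mean()

def eyes_closed(x, fs, ratio_threshold=3.0):
    """Hypothetical rule: eyes closed if alpha (8-12 Hz) power is well above the 4-30 Hz average."""
    return band_power(x, fs, 8.0, 12.0) / band_power(x, fs, 4.0, 30.0) > ratio_threshold

fs = 256                                   # hypothetical sample rate, Hz
t = np.arange(4 * fs) / fs                 # one 4 s analysis window
rng = np.random.default_rng(0)
open_eyes = rng.normal(0.0, 1.0, t.size)   # broadband noise only
closed_eyes = open_eyes + 5.0 * np.sin(2 * np.pi * 10.0 * t)   # add a 10 Hz alpha rhythm
print(eyes_closed(open_eyes, fs), eyes_closed(closed_eyes, fs))
```

A fixed threshold like this is exactly what the variation between users described above would undermine, so a per-user calibration recording is a natural extension.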

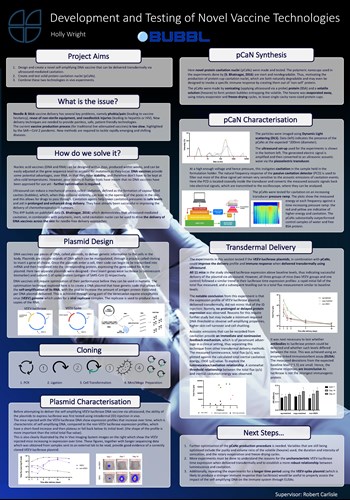

Supporting Information for the 4YP Poster Competition

Project Title: Development and Testing of Novel Vaccine Technologies

The world will continue to need a safe, widely accessible, and quickly adaptable alternative to the currently available vaccines long after the SARS-CoV-2 pandemic. In the face of mounting vaccine hesitancy, these needs would ideally be met by painless, patient-friendly, needle-free technologies.

Hence, the aim of the project was:

• To design and create a novel DNA plasmid encoding self-amplifying mRNA (denoted VEEV) and to test the transdermal delivery of this plasmid using ultrasound-mediated cavitation.

This project combines recent advancements in two fields at the forefront of medical research. The first is cavitation nuclei, which help lower cavitation pressures to safe levels and aid prolonged and enhanced drug delivery. Current biomedical applications of this technology include improving the delivery of chemotherapeutic agents into and throughout tumours, and providing a means of timely, on-target release of cancer drugs.

This project involved the creation and testing of novel protein cavitation nuclei (pCaNs), which are biodegradable and immunostimulatory. Characterisation experiments confirmed the presence of the desired nano-sized cavitation particles. However, further optimisation of the pCaN production procedure is needed to improve sample purity and to ensure reproducible cavitation at operating pressures. The second rapidly evolving field explored in this project is nucleic acid vaccines. DNA vaccines offer a more stable alternative to RNA vaccines, which is crucial given that infectious disease is most prevalent in countries that lack the cold-chain infrastructure RNA vaccines require.

DNA vaccines still require performance optimisation before they can be approved for use in humans. The optimisation technique explored here is to create a DNA plasmid that allows its RNA to self-amplify, with the goal of increasing the amount of antigen protein translated per DNA plasmid delivered. The intradermal (ID) experiment produced a luciferase expression profile characteristic of a self-amplifying vaccine.

Furthermore, the delayed onset of protein expression is conceptually exciting for its potential applications to vaccine dosing. The currently approved RNA vaccines (Moderna, BioNTech) require two doses, which is logistically difficult, more expensive, and time-consuming. With a delayed onset of expression, it may be possible to combine both required doses into a single delivery.

The final experiments explored the possibility of a pain-free vaccine technique optimised using cavitation nuclei and self-amplifying technology. IVIS images confirmed, through a measurable luciferase signal, that the self-amplifying DNA was successfully delivered in vivo. However, following transdermal delivery, the luciferase time-expression profile did not show the expected self-amplifying curve shape of increased and prolonged expression. Further work is needed to understand the mechanisms behind this result.

Finally, the ELISAs carried out to assess the corresponding immune response in the mice after delivery showed no notable deviation from the expected baseline level. Repeating this experiment with a more immunogenic protein, such as the VEEV-Spike plasmid, should therefore lead to more conclusive results.